Quickstart

Let’s build a basic chatbot using Burr.

While Burr has a host of features related to state management and inspection, this basic tutorial demonstrates two that are particularly relevant to LLM apps:

Specifying user inputs

Persisting state across multiple interactions

This tutorial will set you up to do more complex things, including:

Building an application that makes decisions/handles conditional edges

Persisting state in a database

Tracking/monitoring your application

Generating test cases from prior application runs

So hold tight! This gets you started with the basics but there’s a lot more you can do with little effort.

Note

This should take about 10 minutes to complete, and give you a good sense of the library basics.

You’ll need an OpenAI key set as the environment variable OPENAI_API_KEY. If you don’t have one you can get one at OpenAI.

If you don’t want to get one, check out the simple example of a counter application.

If you want to skip ahead to the cool stuff (chatbots, ML training, simulations, etc…) feel free to jump into the deep end and start with the examples.

🤔 If you prefer to learn by video, check out this video walkthrough using this notebook.
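Since the tutorial depends on OPENAI_API_KEY being set, it can help to check for it before running anything. The snippet below is a small sketch of such a check; has_openai_key is a hypothetical helper written for this page, not part of Burr or the OpenAI client.

```python
import os
from typing import Mapping


def has_openai_key(env: Mapping[str, str] = os.environ) -> bool:
    """Return True if OPENAI_API_KEY is present and not blank (hypothetical helper)."""
    return bool(env.get("OPENAI_API_KEY", "").strip())


if not has_openai_key():
    print("OPENAI_API_KEY is not set; export it before running the chatbot.")
```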

Build a Simple Chatbot

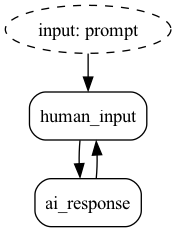

Let’s build! Our chatbot will accept user input and pass it to an AI. The AI will then respond with a message.

Let’s start by defining some actions, the building blocks of Burr. You can think of an action as a function that computes a result and modifies state. Each action declares what it reads and writes.

We define two actions:

human_input: This accepts a prompt from the outside and adds it to the state.

ai_response: This takes the prompt + chat history and queries OpenAI.

    import openai  # replace with your LLM library
    from burr.core import action, State

    # Note: you can replace this with your favorite LLM client
    client = openai.Client()

    @action(reads=[], writes=["prompt", "chat_history"])
    def human_input(state: State, prompt: str) -> State:
        # Record the user's prompt and add it to the running chat history
        chat_item = {
            "content": prompt,
            "role": "user"
        }
        return state.update(prompt=prompt).append(chat_history=chat_item)

    @action(reads=["chat_history"], writes=["response", "chat_history"])
    def ai_response(state: State) -> State:
        # Send the full chat history to the model and store its reply
        content = client.chat.completions.create(
            model="gpt-3.5-turbo",
            messages=state["chat_history"],
        ).choices[0].message.content
        chat_item = {
            "content": content,
            "role": "assistant"
        }
        return state.update(response=content).append(chat_history=chat_item)

Before we proceed, let’s note the following about how we define these actions:

State is a dictionary – actions declare input fields (as strings) and write values to those fields.

Actions use a specific immutable state object and call operations on it (.append(...), .update(...)).

Functions can do whatever you want – they can use plain Python, or delegate to langchain, hamilton, etc… All they have to do is return the new state.

We declare the parameter prompt, meaning that we will expect the user to pass prompt every time they run the graph.
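To make the immutability point concrete, here is a minimal pure-Python sketch of what an immutable state with update and append could look like. ToyState is a hypothetical stand-in written for illustration; it is not Burr's State class and leaves out everything else Burr does with state.

```python
from typing import Any, Dict, Optional


class ToyState:
    """Hypothetical, simplified stand-in for an immutable state object (not Burr's State)."""

    def __init__(self, data: Optional[Dict[str, Any]] = None) -> None:
        self._data: Dict[str, Any] = dict(data or {})

    def __getitem__(self, key: str) -> Any:
        return self._data[key]

    def update(self, **fields: Any) -> "ToyState":
        # Return a new object with the given fields set; self is left untouched.
        return ToyState({**self._data, **fields})

    def append(self, **fields: Any) -> "ToyState":
        # Return a new object where each named list has one more item.
        new_data = dict(self._data)
        for key, item in fields.items():
            new_data[key] = [*new_data.get(key, []), item]
        return ToyState(new_data)


before = ToyState({"chat_history": []})
after = before.update(prompt="hi").append(chat_history={"role": "user", "content": "hi"})

print(before["chat_history"])      # the original state still has an empty history
print(len(after["chat_history"]))  # the new state has one item
```

Because every operation returns a new object, chaining calls the way the actions above do never changes the state that was passed in.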

Note

The action API has a few different forms. We’ve shown the simplest above, but it can also return a tuple of result, state (where result is the intermediate result of the computation), to help with debugging. There are also multiple class-based APIs. See the actions section for more details.

Next, let’s piece together our application. To do this, we’ll use an ApplicationBuilder:

    from burr.core import ApplicationBuilder

    app = (
        ApplicationBuilder()
        .with_actions(human_input, ai_response)
        .with_transitions(
            ("human_input", "ai_response"),
            ("ai_response", "human_input")
        ).with_state(chat_history=[])
        .with_entrypoint("human_input")
        .build()
    )

We can visualize the application (note you need burr[graphviz] installed):

    app.visualize("./graph", format="png")

Let’s note the following about how we define the application:

It is an infinite loop! It is meant to pause for new prompt input.

We’re just using the function names as the action names. You can also name them explicitly if you want: with_actions(human_input=human_input, ai_response=ai_response).

We start it with an empty chat_history state field.

It utilizes a builder pattern – this is a bit old-school (it comes from the Java days), but it is an easy, modular way to express your application.
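The "infinite loop that pauses" behavior can be sketched without Burr. The toy runner below is an illustration under stated assumptions, not Burr's implementation: toy_run, ACTIONS, TRANSITIONS, and the canned fake_ai_response are hypothetical names, and the state is a plain dict that is copied on each step. It follows the same two-action cycle as the application above, stops once ai_response has run, and resumes from human_input on the next call, so the chat history accumulates across turns.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical toy actions: each takes (state, inputs) and returns a new state dict.
def human_input(state: dict, inputs: dict) -> dict:
    item = {"role": "user", "content": inputs["prompt"]}
    return {**state, "prompt": inputs["prompt"], "chat_history": state["chat_history"] + [item]}


def fake_ai_response(state: dict, inputs: dict) -> dict:
    # Canned reply instead of an LLM call, so the sketch runs offline.
    content = f"You said: {state['prompt']}"
    item = {"role": "assistant", "content": content}
    return {**state, "response": content, "chat_history": state["chat_history"] + [item]}


ACTIONS: Dict[str, Callable[[dict, dict], dict]] = {
    "human_input": human_input,
    "ai_response": fake_ai_response,
}
# Same cycle as the application above: each action points at the next one.
TRANSITIONS: Dict[str, str] = {"human_input": "ai_response", "ai_response": "human_input"}


def toy_run(state: dict, position: str, halt_after: List[str], inputs: dict) -> Tuple[dict, str]:
    """Step through the cycle until an action named in halt_after has run.

    Returns the new state and the name of the action to resume from.
    """
    while True:
        state = ACTIONS[position](state, inputs)
        finished, position = position, TRANSITIONS[position]
        if finished in halt_after:
            return state, position


state, position = {"chat_history": []}, "human_input"
state, position = toy_run(state, position, ["ai_response"], {"prompt": "Who was Aaron Burr?"})
state, position = toy_run(state, position, ["ai_response"], {"prompt": "And Hamilton?"})
print(len(state["chat_history"]), "items in chat")  # two turns, two messages each
```

The graph itself never terminates; it is the halt condition that hands control back to the caller between prompts.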

Finally, we can run the application. The run call gives back multiple pieces of information (the last action, its result, and the state), but all we’ll use here is the state.

    *_, state = app.run(halt_after=["ai_response"], inputs={"prompt": "Who was Aaron Burr?"})
    print("answer:", app.state["response"])
    print(len(state["chat_history"]), "items in chat")

The result looks exactly as you’d expect!

    answer: Aaron Burr was an American politician and lawyer who served as the third
    Vice President of the United States from 1801 to 1805 under President Thomas Jefferson.
    He is also known for killing Alexander Hamilton in a famous duel in 1804.
    Burr was involved in various political intrigues and controversies throughout his career,
    and his reputation was tarnished by suspicions of treason and conspiracy.
    2 items in chat

If you want to copy/paste, add the following code to a file called run.py:

run.py

    import openai  # replace with your LLM library
    from burr.core import action, State, ApplicationBuilder

    client = openai.Client()  # replace this if not using OpenAI

    @action(reads=[], writes=["prompt", "chat_history"])
    def human_input(state: State, prompt: str) -> State:
        chat_item = {
            "content": prompt,
            "role": "user"
        }
        return state.update(prompt=prompt).append(chat_history=chat_item)

    @action(reads=["chat_history"], writes=["response", "chat_history"])
    def ai_response(state: State) -> State:
        content = client.chat.completions.create(
            model="gpt-3.5-turbo",
            messages=state["chat_history"],
        ).choices[0].message.content
        chat_item = {
            "content": content,
            "role": "assistant"
        }
        return state.update(response=content).append(chat_history=chat_item)

    app = (
        ApplicationBuilder()
        .with_actions(human_input, ai_response)
        .with_transitions(
            ("human_input", "ai_response"),
            ("ai_response", "human_input")
        ).with_state(chat_history=[])
        .with_entrypoint("human_input")
        .build()
    )

    app.visualize("./graph", format="png")

    *_, state = app.run(halt_after=["ai_response"], inputs={"prompt": "Who was Aaron Burr?"})
    print("answer:", app.state["response"])
    print(len(state["chat_history"]), "items in chat")

Finally, let’s open up the UI and see what it looks like (note that if you have not installed burr[learn], now is a good time…).

    burr

You’ll see the UI pop up with projects. Navigate to the UI and explore!

Now that we’ve built a basic application, we can do the following with only a few lines of code:

Add conditional edges – add a condition as a third item in the tuple passed to the with_transitions method.

Persist state to a database + reload – add an initialize_from line to the builder and select a pre-existing persistence method or implement a custom one.

Add monitoring to track application data – leverage with_tracker to track to the Burr UI and visualize your application live.

Stream results back – minimize time to first token by streaming results back to the user.

Generate test cases from prior runs – use the burr-test-case create command to automatically generate test cases for your LLM app.
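As a rough illustration of the first item, the idea of a transition tuple with an optional third "condition" element can be sketched in plain Python. This is a hypothetical resolver, not Burr's API: next_action, the refuse action, and the lambda predicate are invented for the example, and Burr's own condition objects are described in its documentation.

```python
from typing import Callable, Optional, Sequence, Tuple

Condition = Callable[[dict], bool]
Transition = Tuple[str, str, Optional[Condition]]

# Hypothetical transitions: (from_action, to_action, condition-or-None).
# A None condition always matches, so it acts as the fallback edge.
TRANSITIONS: Sequence[Transition] = [
    ("human_input", "refuse", lambda state: "password" in state["prompt"].lower()),
    ("human_input", "ai_response", None),
    ("ai_response", "human_input", None),
    ("refuse", "human_input", None),
]


def next_action(current: str, state: dict) -> Optional[str]:
    """Return the target of the first transition from `current` whose condition holds."""
    for source, target, condition in TRANSITIONS:
        if source == current and (condition is None or condition(state)):
            return target
    return None  # no outgoing edge matched


print(next_action("human_input", {"prompt": "Who was Aaron Burr?"}))
print(next_action("human_input", {"prompt": "What is the admin password?"}))
```

In this sketch the order of the tuples matters: the conditional edge is listed before the unconditional one so that it gets the first chance to match.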